I’d like to share some of the things we’ve done to improve our AWS and Public Cloud Security posture. This was as much a cultural effort as it was a technical and security effort. Like all security/culture things, YMMV.

When I returned to Turner in my Cloud Security role, I knew we had our work cut out for us. We had about 30-40 AWS accounts across three payer accounts (due to acquisitions, etc.). 75% of our spend was in 7 of those accounts, and those accounts were divided up by major business line (Sports, Entertainment, News and Corporate). These multi-tenant accounts were used by multiple teams, and there was no enforcement of any kind of standard.

The first thing we needed was individual accountability, something we didn’t have with our multi-tenant setup. We moved from major business lines with no clear org-chart delineation to many accounts, each with a VP or Senior Director assigned as the Executive Sponsor. We used an Internal Audit finding regarding user access as the impetus to force someone to take ownership of each account. Whatever no one took ownership of, we shut down.

The second thing we did was force all account owners to give the security team audit permissions in all accounts. This became the basis of an AWS Inventory System, which gathers data on all accounts and caches it as JSON and CSV in S3.
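As a rough illustration of the caching step (a sketch, not the actual inventory code), the snippet below serializes a list of inventory records to both JSON and CSV and builds an S3 object key for each. The record fields, the `inventory/<account>/<type>` key layout, and the function names are all assumptions made for the example; the upload itself is left as a comment.

```python
import csv
import io
import json


def to_json(records):
    """Serialize inventory records to a JSON string."""
    # default=str keeps datetimes and similar values from breaking the dump.
    return json.dumps(records, indent=2, sort_keys=True, default=str)


def to_csv(records, fields):
    """Serialize inventory records to CSV text with a fixed column order."""
    buf = io.StringIO()
    writer = csv.DictWriter(
        buf, fieldnames=fields, extrasaction="ignore", lineterminator="\n"
    )
    writer.writeheader()
    for record in records:
        writer.writerow(record)
    return buf.getvalue()


def cache_key(account_id, resource_type, ext):
    """Hypothetical S3 key layout: one object per account and resource type."""
    return f"inventory/{account_id}/{resource_type}.{ext}"


# Hypothetical record shape, for illustration only.
records = [
    {"account_id": "111111111111", "bucket": "example-logs", "public": False},
]

json_body = to_json(records)
csv_body = to_csv(records, ["account_id", "bucket", "public"])

# With boto3 installed and audit credentials available, each body could be
# written with s3.put_object(Bucket=..., Key=cache_key(...), Body=...).
```

Keeping both formats is cheap: JSON preserves nested detail for later scripts, while CSV is easy to open in a spreadsheet.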

The third thing we did was actually put a Cloud Security Standard down on paper. This defined the basic rules and recommendations called for by the Shared Responsibility Model. Basic things like:

- Use MFA

- Don’t leave your buckets open for the world to read or write

- Don’t open 22 or 3389 to 0.0.0.0/0

- Encrypt like everyone is watching

- Tag stuff

- Rotate keys
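Rules like these are easy to check mechanically. As a minimal sketch (not the scorecard code itself), the function below flags security groups that expose port 22 or 3389 to the world. It assumes the input is a list of dictionaries shaped like the `SecurityGroups` entries returned by the EC2 `DescribeSecurityGroups` API; treating `::/0` as world-open as well is an assumption that goes beyond the rule as written above.

```python
ADMIN_PORTS = (22, 3389)  # SSH and RDP, per the standard above


def rule_covers_port(rule, port):
    """True if an ingress rule's protocol and port range include `port`."""
    protocol = rule.get("IpProtocol")
    if protocol == "-1":  # "-1" means all protocols and all ports
        return True
    if protocol != "tcp":
        return False
    from_port, to_port = rule.get("FromPort"), rule.get("ToPort")
    if from_port is None or to_port is None:
        return False
    return from_port <= port <= to_port


def rule_is_world_open(rule):
    """True if the rule allows traffic from any IPv4 (or, by assumption, IPv6) address."""
    v4 = any(r.get("CidrIp") == "0.0.0.0/0" for r in rule.get("IpRanges", []))
    v6 = any(r.get("CidrIpv6") == "::/0" for r in rule.get("Ipv6Ranges", []))
    return v4 or v6


def noncompliant_groups(security_groups):
    """Return (group_id, exposed_ports) for each world-open admin-port rule."""
    findings = []
    for group in security_groups:
        for rule in group.get("IpPermissions", []):
            if not rule_is_world_open(rule):
                continue
            ports = [p for p in ADMIN_PORTS if rule_covers_port(rule, p)]
            if ports:
                findings.append((group["GroupId"], ports))
    return findings
```

Run against cached inventory data rather than live API calls, a check like this produces exactly the kind of resource-ID list that can feed a punch-list.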

The Cloud Security Standard also put down on paper that the Executive Sponsor was the accountable individual for all things Security and Financial in each AWS account. We circulated drafts of the standards to all the major stakeholders, incorporated feedback and clarified language where their responses differed from our intent.

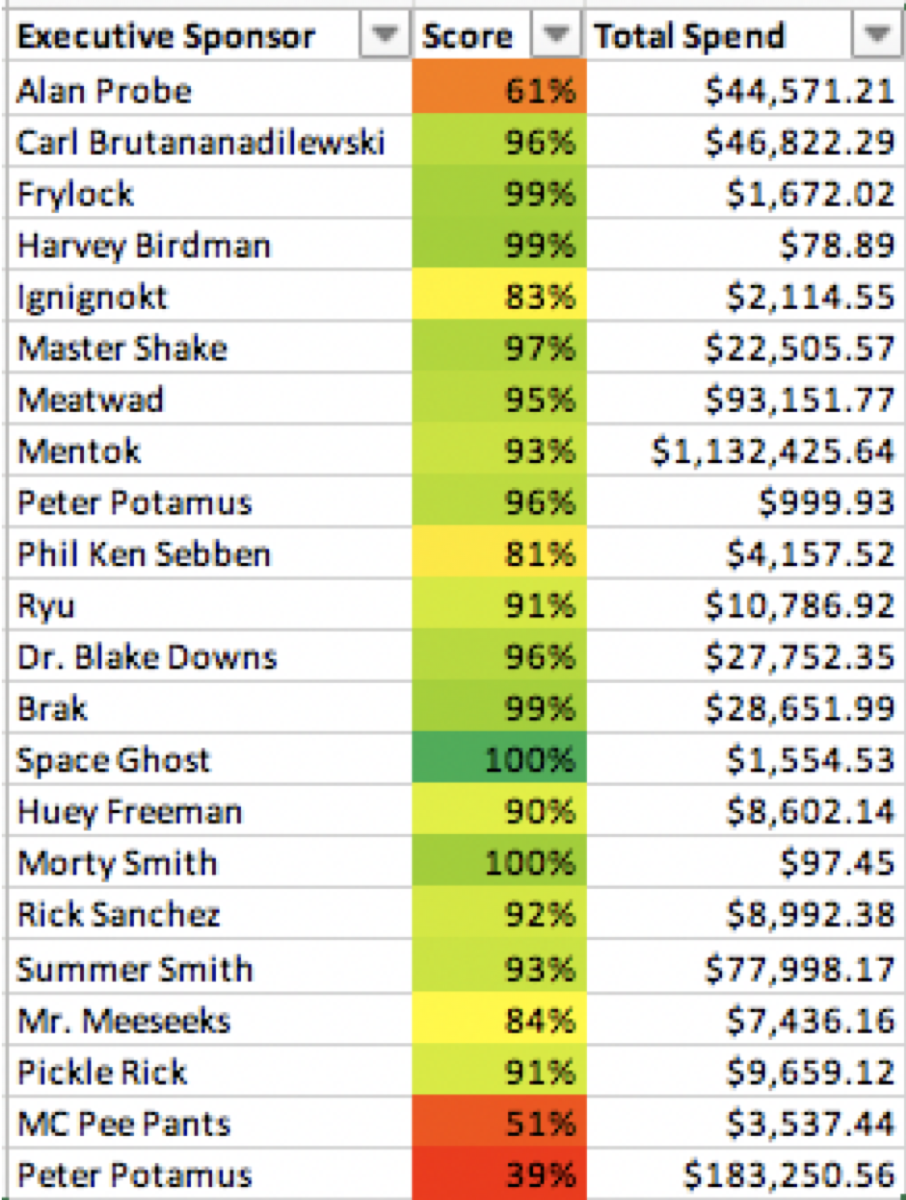

The power of the cloud (from a security perspective at least) is the API, and the API allowed us to measure everyone against the standard. That task fell to me (and one awesome intern). As you can tell from this site, I have no web-design ability. But that wasn’t a major issue because our Executives like colors. Primary Colors. A simple method of grading an account on a 0-100% scale with a color gradient from red to green was ideal for them, and Excel spreadsheets (built with openpyxl) were a perfect solution.
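The grading idea can be sketched in a few lines. The example below is an illustration only, not the scorecard implementation: it computes a percentage from compliant and total resource counts and maps it to a red-to-green hex color passing through yellow at 50%. The function names, the choice of gradient, and the decision to treat an account with zero resources as 100% compliant are all assumptions.

```python
def compliance_percent(compliant, total):
    """Percentage of resources that meet a requirement."""
    if total == 0:
        # Assumption: nothing to check means nothing is non-compliant.
        return 100.0
    return 100.0 * compliant / total


def score_to_hex(percent):
    """Map 0-100% onto a red -> yellow -> green gradient as an RRGGBB string."""
    p = max(0.0, min(100.0, percent)) / 100.0
    if p < 0.5:
        # Lower half: hold red at full, ramp green up.
        red, green = 255, round(255 * (p / 0.5))
    else:
        # Upper half: hold green at full, ramp red down.
        red, green = round(255 * ((1.0 - p) / 0.5)), 255
    return f"{red:02X}{green:02X}00"


# With openpyxl, a value like this could be used as a cell background via
# PatternFill(start_color=hex_value, end_color=hex_value, fill_type="solid").
hex_value = score_to_hex(compliance_percent(49, 100))
```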

With spreadsheets that grade executives we distilled a complex problem into an executive view for the CISO and CTO.

Each executive had their own scorecard reflecting their own AWS accounts. It gave them their score on each requirement in the Cloud Security Standard and, in a separate tab, the list of resource IDs that the system determined were non-compliant. This became each team’s security punch-list. We also integrated security policy exceptions into the scorecards. For example, while FTP is prohibited by the standard, it’s commonly used in the media industry. If a team has to use FTP, we discuss their mitigating controls with them, make sure they’re using passwords that aren’t sensitive, and then grant an exception on that security group ID. The excepted security group ID still appears on the non-compliant resources tab, but it’s highlighted as accepted risk and the score is adjusted to reflect that. Thus, even when we have a condition that the tool thinks is insecure, we acknowledge it and do not leave that requirement in the red. There is no reason why an executive’s score cannot be 100%.
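The exception handling can be illustrated with a small sketch. This is an assumed approach, not the actual scorecard logic: excepted resources stay in the findings list, labeled as accepted risk, but only the remaining open findings count against the score. The function name, status labels, and resource IDs are hypothetical.

```python
def adjusted_score(noncompliant_ids, excepted_ids, total_resources):
    """Return (percent, rows) where excepted findings do not lower the score.

    rows keeps every flagged resource so it still shows up on the
    non-compliant tab, tagged with its status.
    """
    excepted = set(excepted_ids)
    rows = [
        (rid, "accepted risk" if rid in excepted else "non-compliant")
        for rid in noncompliant_ids
    ]
    open_findings = sum(1 for _, status in rows if status == "non-compliant")
    if total_resources == 0:
        # Assumption: an empty account scores 100%.
        percent = 100.0
    else:
        percent = 100.0 * (total_resources - open_findings) / total_resources
    return percent, rows


# Hypothetical example: four security groups, two flagged, one excepted (FTP).
percent, rows = adjusted_score(["sg-ftp", "sg-ssh"], ["sg-ftp"], 4)
```

Here the FTP group remains visible in `rows`, but only the unexcepted group pulls the score down, which is what makes a 100% score reachable.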

When we released the scorecards in Spring the average AWS account was 49% compliant with the standard. By November we were at 87%. In that time we added over 100 AWS accounts.

The mechanics of how we built the scorecards are the topic of my re:Invent Chalk-Talk (which unfortunately won’t be recorded). They will also be the topic of a future post, and my goal is to release the code for both inventory and scorecards as part of a project I’m working on. Additionally, we’re rolling out this same process for our Azure and GCP footprints, and will leverage the same code running in AWS for those other public cloud providers.

Feel free to reach out via LinkedIn or Twitter if you would like more information on what we did or how we did it.