What is Macie?

Amazon’s marketing describes Macie as:

Amazon Macie automates the discovery of sensitive data at scale and lowers the cost of protecting your data. Macie automatically provides an inventory of Amazon S3 buckets including a list of unencrypted buckets, publicly accessible buckets, and buckets shared with AWS accounts outside those you have defined in AWS Organizations. Then, Macie applies machine learning and pattern matching techniques to the buckets you select to identify and alert you to sensitive data, such as personally identifiable information (PII).

When it launched, Macie was super expensive: $8 per GB of data scanned. Macie was an AWS acquisition, so it was not created under the Bezos “API or Die” memo, and it was incredibly difficult to set up. There was no way to collect cost estimates without clicking into each account and each region to get the estimate from the console. It had to turn on its own CloudTrail, triggering additional costs there too. When I looked at what I could use it for in the 100 or so accounts I was responsible for at the time (2018), it was a total non-starter.

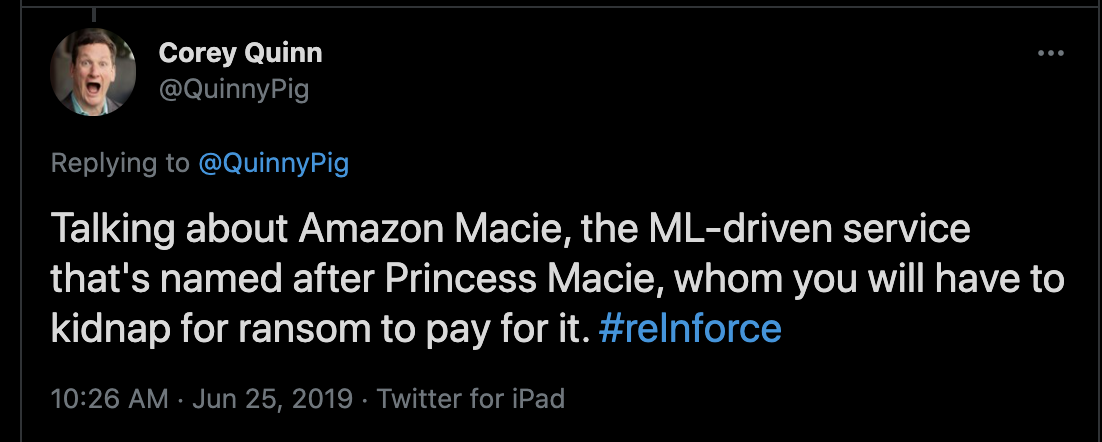

It became the butt of internet jokes.

Why do I care?

PII is like nuclear waste. Get too much of it in one place and you’re likely to have a meltdown. Macie is useful if you’re a major bank that accidentally left 100 million credit card records in an account with web applications. S3 is also not a proper secrets store, and Macie knows how to find certain credentials.

What’s changed?

Macie has come a long way from the crap they released in 2018. Macie now supports Delegated Admin like the GuardDuty service. It has a proper Boto3 API (under the Macie2 namespace). There was an 80% price reduction last year. Additionally they added filter criteria, so you can create sensitive data classification jobs on public buckets only. The Macie console, while still only regional, provides a reasonably nice executive graph on your S3 Bucket Security - showing percentage of publicly readable, publicly writable, and externally shared buckets.

How can I deploy it effectively?

At $1 per GB Macie classification is still 33 to 250 times more expensive than the cost to store the data. That’s a tough sell to finance. Public buckets are an obvious candidate for scanning and it sets up a positive incentive for teams, who will bear the cost in their AWS accounts, to leverage better practices such as CloudFront with Origin Access Identity. “Fix your buckets or pay for Macie” could be a motivator in your organization.
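To see where a multiplier in that range can come from, here is a back-of-the-envelope calculation. The storage prices are illustrative assumptions (one higher per-GB-month price and one archive-tier price), not figures taken from this post, so check current S3 pricing for your region and storage class.

```python
# Rough comparison of a Macie classification pass vs. a month of S3 storage.
MACIE_PRICE_PER_GB = 1.00  # from the text: $1 per GB classified

# Assumed per-GB-month storage prices, for illustration only.
ASSUMED_STORAGE_PRICES = {
    "higher-cost storage": 0.03,
    "archive-tier storage": 0.004,
}


def cost_multiple(storage_price_per_gb: float) -> float:
    """Return how many months of storage one classification pass costs."""
    return MACIE_PRICE_PER_GB / storage_price_per_gb


for label, price in ASSUMED_STORAGE_PRICES.items():
    print(f"{label}: {cost_multiple(price):.0f}x the monthly storage cost")
```

With these assumed prices the multiples land at roughly 33 and 250, which is the range quoted above.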

I wrote some scripts to help you get started with Macie.

The first thing you’ll need to do is enable Delegated Admin. Pick an AWS account in your org where all the Macie findings will be sent. Consider access controls around the team that is going to have to review and triage the findings when selecting that account. Here is a script to help you.
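The script itself is not reproduced here. As a minimal sketch of what the delegation step involves, the Macie2 API exposes `enable_organization_admin_account`, which has to be called once per region from the organization's management (payer) account. The `client_for_region` callable and the function name below are hypothetical helpers for illustration.

```python
def delegate_macie_admin(client_for_region, regions, admin_account_id):
    """Designate admin_account_id as the Macie delegated admin in each region.

    client_for_region is a hypothetical callable returning a Macie2 client
    for a region, for example:
        lambda region: boto3.client("macie2", region_name=region)
    It must use credentials from the organization's management account.
    """
    delegated = []
    for region in regions:
        client = client_for_region(region)
        # Delegation is regional, so this call is repeated for every region.
        client.enable_organization_admin_account(adminAccountId=admin_account_id)
        delegated.append(region)
    return delegated
```

Passing the client factory in keeps the function easy to dry-run against a stub before pointing it at a real organization.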

Once the AWS Payer account has delegated to the Macie account (which has to be done in each enabled region), you need to configure Macie for all child accounts in all regions. Note: AWS charges $0.10 per bucket per month to review configurations and conduct object inventories. This should not break the bank and is necessary to get estimates on the cost of doing data classification on buckets. This script will configure Macie for all regions in the Macie admin account. You’ll need to create a bucket and KMS CMK in your primary region (us-east-1 for me) for storing the archived findings. You do not need a bucket and key for each region. Macie will save findings across regional boundaries.
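A rough sketch of the per-region configuration in the Macie admin account might look like the following. It assumes Macie is already enabled in the admin account for that region and that the findings bucket and KMS key already exist. The function name and the `key_prefix` default are made up for illustration.

```python
def configure_macie_region(client, findings_bucket, kms_key_arn, key_prefix="macie"):
    """Configure one region from the Macie delegated admin account.

    client is a Macie2 client for the region being configured, e.g.
    boto3.client("macie2", region_name=region).
    """
    # Automatically enable Macie for accounts that join the organization later.
    client.update_organization_configuration(autoEnable=True)

    # Send classification results to the single bucket and key in the
    # primary region; the same destination is reused for every region.
    client.put_classification_export_configuration(
        configuration={
            "s3Destination": {
                "bucketName": findings_bucket,
                "keyPrefix": key_prefix,
                "kmsKeyArn": kms_key_arn,
            }
        }
    )
```

Note that `autoEnable` only covers accounts added to the organization afterwards. Accounts that already exist still have to be added as members, which this sketch leaves out.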

Once this is done, you should probably wait a day for Macie to do its thing with bucket and object discovery. You’ll want AWS to have gathered that info before you try to estimate your costs.

The next step in the process is to get an estimated cost. There are a few ways to do this. If you start to create a job in the Macie Console, the console will calculate an estimated price before you create the job, but you need to do this 16+ times, once for each region. Alternatively, I have a script that can give you an estimated cost for all public buckets, or on a per-bucket basis. The script will run in all the regions and provide a global cost estimate.
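As an approximation of how such an estimate could be computed (not the script itself), the sketch below pages through `describe_buckets` with a filter for publicly accessible buckets and multiplies the classifiable size by a flat per-GB price. The flat $1 per GB rate is an assumption taken from the figure used in this post. Real pricing may be tiered, so treat the result as a ballpark.

```python
BYTES_PER_GB = 1024 ** 3
PRICE_PER_GB = 1.00  # assumption: the flat $1 per GB figure used in this post

# Filter for buckets that Macie considers publicly accessible.
PUBLIC_BUCKET_CRITERIA = {"publicAccess.effectivePermission": {"eq": ["PUBLIC"]}}


def list_public_buckets(client):
    """Page through DescribeBuckets for one region and return public buckets."""
    buckets, token = [], None
    while True:
        kwargs = {"criteria": PUBLIC_BUCKET_CRITERIA}
        if token:
            kwargs["nextToken"] = token
        page = client.describe_buckets(**kwargs)
        buckets.extend(page.get("buckets", []))
        token = page.get("nextToken")
        if not token:
            return buckets


def estimate_cost(buckets, price_per_gb=PRICE_PER_GB):
    """Estimate the classification cost in dollars for a list of buckets."""
    total_bytes = sum(b.get("classifiableSizeInBytes", 0) for b in buckets)
    return total_bytes / BYTES_PER_GB * price_per_gb
```

Running `list_public_buckets` with a Macie2 client for each enabled region and summing `estimate_cost` over the results gives a global figure.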

Now that you have an initial idea of the cost to scan all the public buckets in your org, you can get the necessary approvals for the next step - creating the initial scan job.

Again, I’ve created a script to create initial scan jobs for all public buckets. This script has a safety mechanism, and you must pass the appropriate flag in order to actually create the jobs that could bankrupt your company (see --help for more info).

Scan jobs come in two flavors, ONE_TIME and SCHEDULED. The script is configured to create one-time jobs for the initial triage of public buckets, and then a weekly review of all new objects added since the last time the bucket was scanned. One-time jobs can be created for all existing public buckets, or for new buckets you’re looking to review before they are made public.
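To make the two job types and the safety mechanism concrete, here is a minimal sketch built on `create_classification_job`. The function names, the job naming scheme, the `actually_do_it` parameter, and the Monday schedule are all assumptions for illustration, not the options of the script described above.

```python
import uuid


def build_job_request(account_id, bucket_names, weekly=False):
    """Build CreateClassificationJob arguments for one account's buckets."""
    request = {
        "clientToken": str(uuid.uuid4()),  # idempotency token
        "name": f"public-buckets-{account_id}-{'weekly' if weekly else 'initial'}",
        "jobType": "SCHEDULED" if weekly else "ONE_TIME",
        "s3JobDefinition": {
            "bucketDefinitions": [
                {"accountId": account_id, "buckets": list(bucket_names)}
            ]
        },
    }
    if weekly:
        # Recurring job: only look at objects added since the previous run.
        request["scheduleFrequency"] = {"weeklySchedule": {"dayOfWeek": "MONDAY"}}
        request["initialRun"] = False
    return request


def create_job(client, request, actually_do_it=False):
    """Create the job only when explicitly told to; otherwise just report."""
    if not actually_do_it:
        print(f"DRY RUN: would create {request['jobType']} job {request['name']}")
        return None
    return client.create_classification_job(**request)["jobId"]
```

Defaulting to a dry run means a mistyped command costs nothing; spending money requires an explicit opt-in.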

Once you’ve created your scan jobs, you’ll want to review the job status, review the findings per bucket and keep an eye on the actual costs.

Dealing with the findings

Once you’ve set up Macie, you need to do something with the findings. There will be a lot, and many will be false positives. Feeding them to Splunk or a SIEM isn’t a bad solution, but you’ll need to work on fine-tuning those queries. Macie, like GuardDuty, will publish an event to CloudWatch Events (aka EventBridge), and it was trivial to re-purpose my code that feeds GuardDuty findings to Splunk so that it also supports Macie findings (on a different index, of course).
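As a sketch of the event side of this (not the Splunk forwarder itself), the pattern below matches Macie findings in EventBridge, and the handler flattens the fields most useful for triage. The function names and the flattened record layout are assumptions. Forwarding to Splunk or another SIEM is left out.

```python
import json

# EventBridge rule pattern that matches Macie findings.
MACIE_EVENT_PATTERN = {"source": ["aws.macie"], "detail-type": ["Macie Finding"]}


def summarize_finding(event):
    """Flatten the parts of a Macie finding event that matter for triage."""
    detail = event.get("detail", {})
    resources = detail.get("resourcesAffected", {})
    return {
        "id": detail.get("id"),
        "type": detail.get("type"),
        "severity": detail.get("severity", {}).get("description"),
        "account": detail.get("accountId"),
        "region": detail.get("region"),
        "bucket": resources.get("s3Bucket", {}).get("name"),
        # Policy findings are about the bucket, so there is no object key.
        "key": resources.get("s3Object", {}).get("key"),
    }


def lambda_handler(event, context):
    """Lambda entry point: log the flattened record (a forwarder would ship it)."""
    record = summarize_finding(event)
    print(json.dumps(record))
    return record
```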

You’ll end up with two types of findings, one related to the configuration of the bucket (called Policy:IAMUser for some weird reason), and the other for Sensitive Data findings (called SensitiveData:S3Object).

- This page is a good reference for how Macie determines a finding’s severity level

- Breakdown of the JSON event you’ll get for a finding

- Macie actually does a halfway decent job telling you where in the document it found bad things. From their docs:

To help you locate an occurrence of sensitive data, a finding can provide details such as:

- The column and row number for a cell or field in a Microsoft Excel workbook, CSV file, or TSV file.

- The line number for a line in a non-binary text file other than a CSV or TSV file, such as an HTML, JSON, TXT, or XML file.

- The page number for a page in an Adobe Portable Document Format (PDF) file.

- The record index and the path to a field in a record in an Apache Avro object container or Apache Parquet file.

This data is buried in the JSON structure and requires a decent amount of conditional logic to display a clean report. That may become a future part of my Macie scripts.
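To give a feel for that conditional logic, here is a minimal sketch that walks the `occurrences` structure of a sensitive data finding and emits one line per location. It handles only the four location types listed above and ignores custom data identifiers. The function name and output format are assumptions.

```python
def describe_locations(finding):
    """Return human-readable locations for each detection in a finding."""
    result = finding.get("classificationDetails", {}).get("result", {})
    lines = []
    for group in result.get("sensitiveData", []):
        for detection in group.get("detections", []):
            occ = detection.get("occurrences") or {}
            label = f"{group.get('category')}/{detection.get('type')}"
            # Spreadsheets, CSV and TSV files report cells.
            for cell in occ.get("cells") or []:
                lines.append(f"{label}: row {cell.get('row')}, column {cell.get('column')}")
            # Other non-binary text files report line ranges.
            for rng in occ.get("lineRanges") or []:
                lines.append(f"{label}: line {rng.get('start')}")
            # PDFs report pages.
            for page in occ.get("pages") or []:
                lines.append(f"{label}: page {page.get('pageNumber')}")
            # Avro and Parquet files report a record index and field path.
            for rec in occ.get("records") or []:
                lines.append(
                    f"{label}: record {rec.get('recordIndex')}, path {rec.get('jsonPath')}"
                )
    return lines
```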

What’s next

Next up for me is to come up with a good process for reporting findings at scale. As I’ve described in previous posts, reporting visibility to executive account owners has worked well for me in the past. I envision a scorecard with a “delete this object or prove it isn’t sensitive” option as the way to remediate. Measuring compliance will be hard, as Macie doesn’t close a finding once the object has been deleted. It will be a not-insignificant engineering effort to take the list of findings and assume a role in each account to confirm the object in question has been deleted.
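One way that check could be approached, sketched under assumptions: for each sensitive data finding, issue a `head_object` for the affected object and treat a not-found error as "remediated". The `s3_client_for_account` callable is a hypothetical helper that would assume a role in the finding's account and return an S3 client. The error codes checked are the ones a missing key typically returns.

```python
def object_still_exists(s3_client, bucket, key):
    """Return True if the object is still present, False if it is gone."""
    try:
        s3_client.head_object(Bucket=bucket, Key=key)
        return True
    except Exception as exc:
        # botocore's ClientError carries the error code in exc.response.
        code = getattr(exc, "response", {}).get("Error", {}).get("Code")
        if code in ("404", "NoSuchKey", "NotFound"):
            return False
        raise  # access denied, throttling, etc. should not count as deleted


def unresolved_findings(findings, s3_client_for_account):
    """Return ids of findings whose object has not been deleted yet."""
    still_open = []
    for finding in findings:
        resources = finding.get("resourcesAffected", {})
        bucket = resources.get("s3Bucket", {}).get("name")
        key = resources.get("s3Object", {}).get("key")
        if not bucket or not key:
            continue  # policy findings have no object to check
        s3 = s3_client_for_account(finding["accountId"])
        if object_still_exists(s3, bucket, key):
            still_open.append(finding["id"])
    return still_open
```

On a versioned bucket a deleted object can still have older versions lying around, so a real implementation would probably need to look at object versions as well.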

…