The Origin Story

This adventure began, like most do, with a wizard crashing a party:

It turned out Jerry had been doing some open-bucket discovery and had found several buckets with the pattern of letters “cnn” in their names. At the time we had somewhere around 80 AWS accounts, and our financial tool didn’t find any of those buckets. Because we’d been building out the concept of the Security Account, we had the ability to go cross-account and list all the buckets, so I wrote a small script to do just that. One bucket turned out to be ours, another belonged to someone trying to figure out how to break our CNN Go app, and the rest had nothing to do with CNN.
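The original script isn’t reproduced here. A minimal sketch of the idea, assuming you already hold one S3 client per account (for example, built from an assumed cross-account role): call ListBuckets in each account and keep the names that contain the pattern. The function name and the `clients_by_account` mapping are hypothetical.

```python
from typing import Dict, List, Tuple


def find_buckets_matching(clients_by_account: Dict[str, object],
                          pattern: str) -> List[Tuple[str, str]]:
    """Return (account_id, bucket_name) pairs whose name contains `pattern`.

    `clients_by_account` maps an account ID to an S3 client for that account,
    e.g. boto3.client("s3") built from assumed-role credentials (assumption).
    """
    needle = pattern.lower()
    matches = []
    for account_id, s3 in clients_by_account.items():
        # ListBuckets returns every bucket owned by the calling account.
        for bucket in s3.list_buckets().get("Buckets", []):
            if needle in bucket["Name"].lower():
                matches.append((account_id, bucket["Name"]))
    return matches
```

Any bucket from the external list that never shows up in the result isn’t owned by any account you can reach.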

Around that time, our auditors decreed: “Thou shalt attest everyone who has access.” We needed to discover which IAM users existed in every account, which identity provider each account used, and which AD groups were mapped to which IAM roles.
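A minimal sketch of the per-account attestation facts, assuming an IAM client for each account and a SAML-based identity provider such as ADFS. The AD-group-to-role mapping itself lives in the identity provider, so from the AWS side you can only list the roles whose trust policy allows `sts:AssumeRoleWithSAML`. OIDC providers and wildcard actions are not handled, and the function names are hypothetical.

```python
from typing import Dict, List


def _as_list(value) -> list:
    # IAM policy fields may hold a single string or a list of strings.
    if value is None:
        return []
    return value if isinstance(value, list) else [value]


def saml_trusting_roles(roles: List[dict]) -> Dict[str, List[str]]:
    """Map role name -> SAML provider ARNs allowed to assume it.

    `roles` is the "Roles" list from IAM ListRoles; boto3 returns each
    AssumeRolePolicyDocument already decoded into a dict.
    """
    result: Dict[str, List[str]] = {}
    for role in roles:
        doc = role.get("AssumeRolePolicyDocument") or {}
        for stmt in _as_list(doc.get("Statement")):
            if stmt.get("Effect") != "Allow":
                continue
            # Exact action match only; wildcards such as "sts:*" are skipped.
            if "sts:AssumeRoleWithSAML" not in _as_list(stmt.get("Action")):
                continue
            principal = stmt.get("Principal")
            if not isinstance(principal, dict):
                continue
            providers = _as_list(principal.get("Federated"))
            if providers:
                result.setdefault(role["RoleName"], []).extend(providers)
    return result


def attest_account(iam) -> dict:
    """Collect IAM users, SAML providers and SAML-trusting roles for one account."""
    users = [u["UserName"]
             for page in iam.get_paginator("list_users").paginate()
             for u in page["Users"]]
    providers = [p["Arn"] for p in iam.list_saml_providers()["SAMLProviderList"]]
    roles = [r
             for page in iam.get_paginator("list_roles").paginate()
             for r in page["Roles"]]
    return {"iam_users": users,
            "saml_providers": providers,
            "saml_roles": saml_trusting_roles(roles)}
```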

Finally, we were in the middle of the merger, and our legal department asked: “Can you provide all external IP addresses in use at the company?”
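A minimal sketch of answering that question for one region of one account, assuming a regional EC2 client: union the Elastic IPs from DescribeAddresses with the public IPs attached to network interfaces from DescribeNetworkInterfaces. This only covers resources that place an ENI in your account (instances, NAT gateways, load balancers and so on); CloudFront, for example, does not. Function names are hypothetical.

```python
from typing import Iterable, Set


def public_ips(addresses: Iterable[dict], interfaces: Iterable[dict]) -> Set[str]:
    """Union of Elastic IPs and public IPs attached to network interfaces.

    An associated Elastic IP appears in both API results, so a set removes
    duplicates; an unassociated Elastic IP appears only in DescribeAddresses.
    """
    ips = {a["PublicIp"] for a in addresses if "PublicIp" in a}
    for eni in interfaces:
        assoc = eni.get("Association") or {}
        if "PublicIp" in assoc:
            ips.add(assoc["PublicIp"])
    return ips


def collect_region(ec2) -> Set[str]:
    """Run both calls against one regional EC2 client."""
    addresses = ec2.describe_addresses()["Addresses"]
    interfaces = [eni
                  for page in ec2.get_paginator("describe_network_interfaces").paginate()
                  for eni in page["NetworkInterfaces"]]
    return public_ips(addresses, interfaces)
```

Repeating `collect_region` for every region in every account is exactly the fan-out that makes the console approach impractical.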

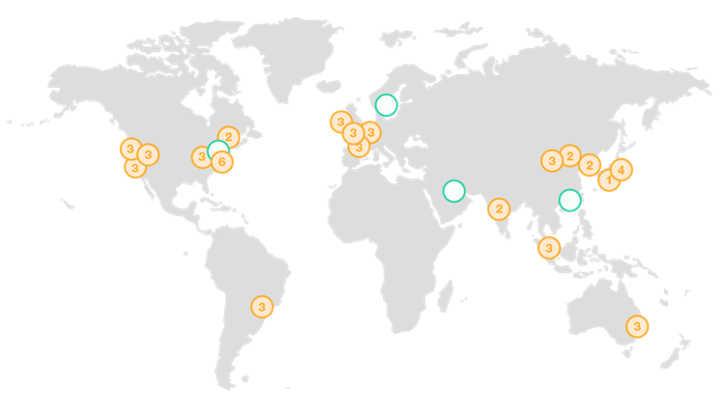

Fundamentally, the issue with AWS is that each AWS account is its own isolated bubble (or it was 4 years ago), with nothing more than a parent account for enterprise invoicing. Each account had its own login, and resources could be anywhere in the world.

16 regions across 250 accounts means 4,000 individual consoles you need to visit to find the resource in question.

The need for inventory

We knew we were planning to expand to an “Oprah account strategy” and our account count would only be increasing. We already had three different AWS Organizations (and we added another during the process). Each team was doing its own thing. Some were all EC2, some ECS, some were running their own container orchestration. One team decided they needed to create an AWS Account per S3 Bucket.

Security Operations was having issues too. They would get alerts about IP addresses and not know whom to contact. Our ITIL team was focused on on-prem systems, and we didn’t have a way to manage account ownership.

We were also using all the cutting-edge AWS services. Our financial reporting tool didn’t inventory Lambda, and AWS Config didn’t support SageMaker or managed Elasticsearch.

What we did

I’ve spoken and blogged about Antiope in the past. The tool’s premise is simple: using AWS Lambda, Step Functions and cross-account IAM roles, discover all the AWS accounts under all the known payer accounts and inventory the resources in each of them. This data is ingested into both Elasticsearch and Splunk for report generation and ad-hoc querying. Unlike AWS Config, Antiope captures all the resource types of interest, not just the core compute, networking and storage ones.
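The cross-account step that each inventory Lambda performs can be sketched as a single STS AssumeRole call from the security account. This is a minimal sketch, not Antiope’s code: the role name, session name and function names are placeholders, and the commercial `aws` partition is assumed.

```python
from typing import Dict


def role_arn(account_id: str, role_name: str) -> str:
    # Standard IAM role ARN format (commercial "aws" partition assumed).
    return f"arn:aws:iam::{account_id}:role/{role_name}"


def cross_account_credentials(sts, account_id: str, role_name: str,
                              session_name: str = "inventory") -> Dict[str, str]:
    """Assume `role_name` in `account_id` and return boto3 Session kwargs.

    `sts` is an STS client in the security (trusted) account; the target role's
    trust policy must allow that account to assume it.
    """
    creds = sts.assume_role(RoleArn=role_arn(account_id, role_name),
                            RoleSessionName=session_name)["Credentials"]
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }
```

With boto3 installed, the result plugs straight into a session: `boto3.Session(**cross_account_credentials(boto3.client("sts"), "123456789012", "audit-role")).client("ec2", region_name="us-east-1")`.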

Antiope takes a list of payer accounts as input. This lets us scale as the organization scales, and it doesn’t require different tooling for each acquired group.

The primary account table allows us to add arbitrary attributes, so our ownership records, data classifications, ServiceNow records, etc., can all be aggregated from different systems into one place.
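As an illustration of “arbitrary attributes”, here is a minimal sketch that assumes the account table is a DynamoDB table keyed on a hypothetical `account_id` attribute. It builds an UpdateItem call that sets whatever attributes an upstream system supplies. The key name, attribute names and function names are all hypothetical.

```python
from typing import Any, Dict


def build_update(attributes: Dict[str, Any]) -> Dict[str, Any]:
    """Build DynamoDB UpdateItem arguments that SET arbitrary attributes.

    Placeholders (#a0, :v0, ...) keep reserved words and odd characters in
    attribute names from breaking the update expression.
    """
    if not attributes:
        raise ValueError("nothing to update")
    names, values, clauses = {}, {}, []
    for i, (name, value) in enumerate(sorted(attributes.items())):
        names[f"#a{i}"] = name
        values[f":v{i}"] = value
        clauses.append(f"#a{i} = :v{i}")
    return {
        "UpdateExpression": "SET " + ", ".join(clauses),
        "ExpressionAttributeNames": names,
        "ExpressionAttributeValues": values,
    }


def tag_account(table, account_id: str, attributes: Dict[str, Any]) -> None:
    # `table` is a boto3 DynamoDB Table resource; "account_id" as the
    # partition key is an assumption for this sketch.
    table.update_item(Key={"account_id": account_id}, **build_update(attributes))
```

Each feeder (ServiceNow, a data-classification process, and so on) only writes the attributes it owns, so they can update the same row without overwriting one another.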

Internally, we’ve extended the model to run custom functions and reports. We ingest 85% of our account ownership data from various ServiceNow instances. We generate reports that describe the health of our security tooling. And we provide our incident response team with a webpage listing all our accounts, their escalation contacts, and a pre-formed cross-account role link they can use from the security account to pivot into any of our accounts for investigations.
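A pre-formed link like that can be built from the AWS console’s Switch Role URL, which accepts the account, role name and an optional display name as query parameters. This is a minimal sketch; the role name used in the example and the function name are hypothetical.

```python
from urllib.parse import quote, urlencode


def switch_role_link(account_id: str, role_name: str, display_name: str = "") -> str:
    """Console link that pre-fills the "Switch Role" form for one account."""
    params = {"account": account_id, "roleName": role_name}
    if display_name:
        params["displayName"] = display_name
    # quote_via=quote encodes spaces as %20 rather than "+".
    return "https://signin.aws.amazon.com/switchrole?" + urlencode(params, quote_via=quote)
```

For example, `switch_role_link("123456789012", "incident-response")` returns `https://signin.aws.amazon.com/switchrole?account=123456789012&roleName=incident-response`; rendering one per row of the account list gives responders a one-click pivot.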

What this has enabled

Full list of all our cloud accounts

As part of the inventory, we run organizations.list_accounts() on every payer, so new accounts are discovered automatically. We publish a JSON file listing the accounts and who owns them, which all our other processes can consume.
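A minimal sketch of that step, assuming one Organizations client per payer (management) account and an owner lookup already pulled from the systems of record. The output field names and function names are hypothetical, not Antiope’s actual file format.

```python
import json
from typing import Dict, List


def accounts_for_payer(org) -> List[dict]:
    """Page through Organizations ListAccounts in one payer account."""
    accounts = []
    for page in org.get_paginator("list_accounts").paginate():
        for acct in page["Accounts"]:
            accounts.append({"id": acct["Id"], "name": acct["Name"],
                             "email": acct["Email"], "status": acct["Status"]})
    return accounts


def build_inventory(orgs_by_payer: Dict[str, object],
                    owners: Dict[str, str]) -> List[dict]:
    """Merge the accounts from every payer and attach an owner to each."""
    inventory = []
    for payer_id, org in orgs_by_payer.items():
        for acct in accounts_for_payer(org):
            acct["payer_id"] = payer_id
            # Owner data would come from systems of record such as ServiceNow.
            acct["owner"] = owners.get(acct["id"], "UNKNOWN")
            inventory.append(acct)
    return inventory


def write_inventory(inventory: List[dict], path: str) -> None:
    """Publish the merged list as the JSON file other processes consume."""
    with open(path, "w") as fh:
        json.dump(inventory, fh, indent=2)
```

Because the payer list is the only input, adding an acquired company’s organization means adding one more client to `orgs_by_payer`.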

As the business has changed, we’ve been required to interface with more and more systems of record for cloud data. Antiope has allowed us to aggregate all that data into a single location for dissemination to the other security platforms we run.

Automated deployment of security controls

Whenever a new account is created, a message is sent to the new-account topic. This allows us to integrate external security tooling and (prior to the release of GuardDuty for Organizations) to enable GuardDuty and subscribe the account to a master account.
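A minimal sketch of both sides of that flow, assuming the topic is an SNS topic and the subscriber is a Lambda function in the GuardDuty master account. The message shape and function names are hypothetical. The member account still has to accept the GuardDuty invitation, which is not shown.

```python
import json
from typing import Iterable, List, Set


def new_accounts(current: Iterable[dict], known_ids: Set[str]) -> List[dict]:
    """Accounts present in this inventory run but not in the previous one."""
    return [a for a in current if a["id"] not in known_ids]


def announce(sns, topic_arn: str, accounts: List[dict]) -> int:
    """Publish one message per new account to the new-account SNS topic."""
    for acct in accounts:
        sns.publish(TopicArn=topic_arn, Message=json.dumps(acct))
    return len(accounts)


def guardduty_subscriber(event, guardduty, detector_id: str) -> List[str]:
    """Lambda-style SNS handler in the GuardDuty master account (hypothetical wiring).

    Adds each announced account as a GuardDuty member, then invites them.
    """
    added = []
    for record in event["Records"]:
        acct = json.loads(record["Sns"]["Message"])
        guardduty.create_members(
            DetectorId=detector_id,
            AccountDetails=[{"AccountId": acct["id"], "Email": acct["email"]}])
        added.append(acct["id"])
    if added:
        guardduty.invite_members(DetectorId=detector_id, AccountIds=added)
    return added
```

Other security tools subscribe to the same topic, so onboarding a new tool doesn’t require changing the inventory code.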

Scorecards & Compliance Reporting

The Scorecard system leverages Antiope’s account inventory and ownership data to build the compliance scorecards. We’re now feeding that data into the enterprise vulnerability management tool, and pulling in all the EC2, S3 Bucket and IAM metadata to help rank risk better.

Engineering support - finding who is who

One side effect of having all our Antiope data in Splunk is that we can quickly answer the question “Anyone know what IP 52.192.87.16 is?” We can respond right away: “It’s a NAT gateway in ap-southeast-1 for vpc-12345 in Victor Laszlo’s account.” We’ve ended up being the go-to team for the help desk when they don’t know how to route a DNS update for a zone hosted in Route53.
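The Splunk search itself isn’t shown in the post. The equivalent lookup can be sketched in Python over inventoried network interfaces, assuming the collector has tagged each DescribeNetworkInterfaces record with hypothetical `account_id` and `region` fields and that owners come from the account table.

```python
from typing import Dict, Iterable, Optional


def build_ip_index(enis: Iterable[dict]) -> Dict[str, dict]:
    """Index inventoried network interfaces by their public IP."""
    index = {}
    for eni in enis:
        ip = (eni.get("Association") or {}).get("PublicIp")
        if ip:
            index[ip] = eni
    return index


def who_owns(ip: str, index: Dict[str, dict], owners: Dict[str, str]) -> Optional[str]:
    """Describe the resource behind a public IP, or None if it isn't ours."""
    eni = index.get(ip)
    if eni is None:
        return None
    owner = owners.get(eni["account_id"], "unknown owner")
    # InterfaceType distinguishes e.g. "nat_gateway" from plain "interface".
    return (f"{eni.get('InterfaceType', 'interface')} in {eni['region']} "
            f"for {eni.get('VpcId', 'no VPC')} in {owner}'s account {eni['account_id']}")
```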

Threat Hunting

Antiope is useful for ad-hoc threat hunting. Need to know whether an Elasticsearch cluster is public? Add that service to the inventory, and an hour later the data is available to search. Get notice of an RCE zero-day in SaltStack? Run a query to see which security groups have ports 4505 and 4506 open. Or search for resources running a specific Marketplace AMI.
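The SaltStack query can be sketched over inventoried DescribeSecurityGroups records. This version assumes “open” means reachable from the whole internet (0.0.0.0/0 or ::/0). It treats protocol `-1` as every port, and it ignores rules that reference other security groups or prefix lists. Function names are hypothetical.

```python
from typing import Iterable, List, Sequence


def opens_port(permission: dict, port: int) -> bool:
    """True if one IpPermissions entry allows TCP `port` from 0.0.0.0/0 or ::/0."""
    proto = permission.get("IpProtocol")
    if proto == "-1":
        covered = True  # all protocols, all ports
    elif proto in ("tcp", "6"):
        covered = permission.get("FromPort", 0) <= port <= permission.get("ToPort", 65535)
    else:
        return False
    world = (any(r.get("CidrIp") == "0.0.0.0/0" for r in permission.get("IpRanges", []))
             or any(r.get("CidrIpv6") == "::/0" for r in permission.get("Ipv6Ranges", [])))
    return covered and world


def exposed_groups(groups: Iterable[dict], ports: Sequence[int] = (4505, 4506)) -> List[str]:
    """GroupIds whose inbound rules expose any of `ports` to the internet."""
    return [g["GroupId"] for g in groups
            if any(opens_port(p, port)
                   for p in g.get("IpPermissions", [])
                   for port in ports)]
```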

Security Operations cross-references resources discovered by Antiope for tag metadata and ownership.

Support Tickets

Ever get one of these notices?

As a security team, are you sure you’re getting all the notices? We started pulling in all the support tickets, and now we can alert on the FIXME

IP Protection

Other teams have reached out to use this too. We provide a report to legal on all the Route53 domains registered in the organization. We could block this service and “require” everyone to register domains through legal, but legal doesn’t have a workflow queue, and teams are as likely to use GoDaddy as they are to follow the process. Because registering through Route53 is easy, teams use it, which gives us visibility into domain registrations and lets us make sure Legal sees and updates what’s being registered.
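A minimal sketch of the legal report, assuming one Route 53 Domains client per account (the Route 53 Domains API is served from us-east-1). The row fields and function name are hypothetical; `DomainName`, `AutoRenew` and `Expiry` come from the ListDomains response.

```python
from typing import Dict, List


def domain_report(clients_by_account: Dict[str, object]) -> List[dict]:
    """One row per Route 53 registered domain across all accounts.

    Each client is assumed to be boto3.client("route53domains",
    region_name="us-east-1") built from that account's credentials.
    """
    rows = []
    for account_id, r53d in clients_by_account.items():
        for page in r53d.get_paginator("list_domains").paginate():
            for d in page["Domains"]:
                rows.append({"account_id": account_id,
                             "domain": d["DomainName"],
                             "auto_renew": d.get("AutoRenew"),
                             "expiry": d.get("Expiry")})
    # Sort by domain so legal can scan the report alphabetically.
    return sorted(rows, key=lambda r: r["domain"])
```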

Lessons learned

“Architect for the AWS you have, not the AWS you want” – Donald Rumsfeld

Build vs Buy

I’m not sure we ever consciously chose to roll our own inventory tool. The first iteration was based solely on scripts I was building to answer questions the business was asking. When it became clear that maintaining these ad-hoc scripts wasn’t going to work, I revamped the workflow and built Antiope.

API Exhaustion

This is a term I’ve coined, and it’s becoming a concern in our environment. Every account is scanned by two financial reporting tools, ServiceNow, Antiope, CloudSploit, an optimization tool, Cloud Custodian, etc. Each of these tools lists and describes resources on a regular basis. This generates a lot of CloudTrail events (which increases the bills for services like GuardDuty), and could theoretically cause issues with API limits.
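To see why this adds up, multiply the overlapping scanners across the estate. The account and region counts and the seven tools come from this post; the 50 calls per region per run and hourly runs are purely hypothetical figures chosen for illustration.

```python
def daily_api_calls(accounts: int, regions: int, tools: int,
                    calls_per_region_per_run: int, runs_per_day: int) -> int:
    """Rough count of read API calls per day from overlapping inventory tools."""
    return accounts * regions * tools * calls_per_region_per_run * runs_per_day


# 250 accounts, 16 regions, 7 tools; 50 calls per region per run and
# 24 runs a day are hypothetical assumptions, not measured values.
estimate = daily_api_calls(250, 16, 7, 50, 24)  # 33,600,000 calls per day
```

Every one of those calls can become a CloudTrail management event, which is what services priced per CloudTrail event end up analyzing.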

Future

Right now, Antiope is complex to install. We’re working to restructure it around nested CloudFormation stacks and SSM Parameter Store for something closer to a one-click deployment.

Antiope for Azure and Antiope for GCP will be released shortly. The Azure version will take a JSON file of service principals and (if granted read access at the root management group) discover all the subscriptions in each tenant. It will also find all of the virtual machines in each tenant. GCP is similar: if given credentials at the root of an organization, it will auto-discover all the projects.

We’re hoping to release the scorecard system as part of Antiope this summer. We’re also working on a new concept, the Antiope Perimeter Scanner. The idea behind this tool is to conduct real-time assessments against cloud assets we know belong to us. It will help risk-rank security groups that are open on ports 0-65535 (leveraging nmap), or provide a list of objects that are listable and readable in open buckets.